Another less obvious benefit of filter() is that it returns an iterable.
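Here is a minimal base-Python sketch of what this means. The object that filter() returns produces values only when you iterate over it, and one pass uses it up. The list of numbers is made up for illustration.

```python
# filter() returns a lazy iterator, not a list.
numbers = [1, 2, 3, 4, 5, 6]

evens = filter(lambda n: n % 2 == 0, numbers)
print(type(evens).__name__)  # filter

# Values are produced only when something iterates (here, list()).
first_pass = list(evens)
print(first_pass)  # [2, 4, 6]

# The iterator is now exhausted, so a second pass yields nothing.
second_pass = list(evens)
print(second_pass)  # []
```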

This is the power of the PySpark ecosystem: it lets you take functional code and automatically distribute it across an entire cluster of computers. We now have a task that we'd like to parallelize. Keep in mind that DataFrames, like other distributed data structures, are not iterable. They can be accessed only through dedicated higher-order functions and/or SQL methods. A plain Python thread pool, by contrast, simply adds threads to the driver node.

Py4J isn't specific to PySpark or Spark.

Spark itself runs jobs in parallel. If you still want parallel execution in your driver code, you can use plain Python parallel-processing tools to do it (this was tested on Databricks only). Again, the function being applied can be a standard Python function created with the def keyword or a lambda function. You can learn many of the concepts needed for Big Data processing without ever leaving the comfort of Python. Then, you'll be able to translate that knowledge into PySpark programs and the Spark API.

Or is there a different framework and/or Amazon service that I should be using to accomplish this? You could collect the data and iterate locally, but that defeats the whole purpose of using Spark. The loop-based approach has two problems: there may not be enough memory to load the list of all items or bills, and it may take too long to get the results because the execution is sequential (due to the 'for' loop).

There are higher-level functions that take care of forcing an evaluation of the RDD values. The pyspark.rdd.RDD.mapPartitions method, for example, is lazily evaluated.
Starting the container with docker run may take a few minutes because it downloads the images directly from DockerHub along with all the requirements for Spark, PySpark, and Jupyter. Once that command stops printing output, you have a running container that has everything you need to test out your PySpark programs in a single-node environment.

For this tutorial, the goal of parallelizing the task is to try out different hyperparameters concurrently, but this is just one example of the types of tasks you can parallelize with Spark.

On Azure Databricks, the SparkContext variable sc exists by default. I am using Azure Databricks to analyze some data.

RDDs are optimized to be used on Big Data, so in a real-world scenario a single machine may not have enough RAM to hold your entire dataset. Note: Be careful when using methods such as collect() that pull the entire dataset into memory. They will not work if the dataset is too big to fit into the RAM of a single machine. The bottleneck described above comes from the sequential 'for' loop (and also from the collect() method).

Spark is written in Scala, which means you have two sets of documentation to refer to. The PySpark API docs have examples, but often you'll want to refer to the Scala documentation and translate the code into Python syntax for your PySpark programs. Luckily, Scala is a very readable function-based programming language.

The core idea of functional programming is that data should be manipulated by functions without maintaining any external state. Like filter() and map(), reduce() applies a function to the elements of an iterable. However, reduce() doesn't return a new iterable; it combines the elements into a single value. First, you'll see the more visual interface with a Jupyter notebook.

@KamalNandan, if you just need pairs, then a self join could be enough. The return value of compute_stuff (and hence, each entry of values) is also a custom object.

With Pandas UDFs, you can partition a Spark data frame into smaller data sets that are distributed and converted to Pandas objects, where your function is applied, and then the results are combined back into one large Spark data frame. The code is for Databricks, but with a few changes it will work in your environment.
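A small sketch of the contrast with filter() and map(), using made-up data. In Python 3, reduce() lives in functools and folds an iterable into one value. The lambdas keep no external state: each call depends only on its arguments.

```python
from functools import reduce

words = ["spark", "python", "cluster"]

# Fold the list into a single number: the total length of all words.
total_length = reduce(lambda acc, word: acc + len(word), words, 0)

# Fold the list into a single word: the longest one (first wins on ties).
longest = reduce(lambda a, b: a if len(a) >= len(b) else b, words)

print(total_length)  # 18
print(longest)       # cluster
```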
Luckily for Python programmers, many of the core ideas of functional programming are available in Python's standard library and built-ins. Python exposes anonymous functions using the lambda keyword, not to be confused with AWS Lambda functions. Sets are very similar to lists, except they do not have any ordering and cannot contain duplicate values. A lazily evaluated iterable is similar to a Python generator.

Using thread pools this way is dangerous, because all of the threads will execute on the driver node. However, what if we also want to concurrently try out different hyperparameter configurations? There are multiple ways of achieving parallelism when using PySpark for data science. Shared data can be accessed inside Spark functions.

For a command-line interface, you can use the spark-submit command, the standard Python shell, or the specialized PySpark shell. One potential hosted solution is Databricks. The Jupyter team publishes a Dockerfile that includes all the PySpark dependencies along with Jupyter.

I have changed your code a bit, but this is basically how you can run parallel tasks. For example, say we have a parquet file with 2000 stock symbols' closing prices for the past 3 years, and we want to calculate the 5-day moving average for each symbol.
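To make the moving-average task concrete, here is a single-machine Python sketch of the group-then-slide logic. It assumes the input is (symbol, day, close) tuples; the function name and sample data are hypothetical. In Spark, the grouping step would be done by key across the cluster instead of in a local dictionary.

```python
from collections import defaultdict

def moving_averages(rows, window=5):
    """Compute per-symbol moving averages of closing prices.

    rows: iterable of (symbol, day, close) tuples, in any order.
    Returns {symbol: [average over each run of `window` consecutive days]}.
    Symbols with fewer than `window` days get an empty list.
    """
    # Step 1: group rows by symbol (the role groupByKey plays in Spark).
    by_symbol = defaultdict(list)
    for symbol, day, close in rows:
        by_symbol[symbol].append((day, close))

    # Step 2: sort each symbol's history by day and slide a fixed window over it.
    result = {}
    for symbol, points in by_symbol.items():
        closes = [close for _, close in sorted(points)]
        result[symbol] = [
            sum(closes[i - window:i]) / window
            for i in range(window, len(closes) + 1)
        ]
    return result

# Hypothetical sample data: 7 days for "AAA", 5 days for "BBB".
rows = [("AAA", d, float(d)) for d in range(1, 8)] + [("BBB", d, 10.0) for d in range(1, 6)]
print(moving_averages(rows))  # {'AAA': [3.0, 4.0, 5.0], 'BBB': [10.0]}
```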
Before showing off parallel processing in Spark, let's start with a single-node example in base Python. Before getting started, it's important to make a distinction between parallelism and distribution in Spark. Spark maintains a directed acyclic graph of the transformations, which is what makes lazy evaluation possible. It also has APIs for transforming data, and familiar data frame APIs for manipulating semi-structured data. The full notebook for the examples presented in this tutorial is available on GitHub, and a rendering of the notebook is available here. Again, using the Docker setup, you can connect to the container's CLI as described above.

I have some computationally intensive code that's embarrassingly parallelizable. I'm assuming that PySpark is the standard framework one would use for this, and that Amazon EMR is the relevant service that would enable me to run this across many nodes in parallel. So I want to run the n=500 iterations in parallel by splitting the computation across 500 separate nodes running on Amazon, cutting the run time for the inner loop down to ~30 seconds.

You can tune hyperparameters manually, as shown in the next two sections, or use the CrossValidator class, which performs this operation natively in Spark. To "loop" and take advantage of Spark's parallel computation framework, you could define a custom function and use map.
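A single-node starting point in base Python might look like the sketch below. The body of compute_stuff is a hypothetical stand-in, since the real computation isn't shown. The point is that a loop of independent calls can be rewritten as map(), and that shape is what a parallel map can take over.

```python
def compute_stuff(x):
    """Hypothetical stand-in for an expensive, independent computation."""
    return x * x

inputs = list(range(10))

# Plain sequential for loop: one element at a time.
results_loop = []
for x in inputs:
    results_loop.append(compute_stuff(x))

# The same work expressed functionally with map(). Each call depends only
# on its own input, which is what makes the task embarrassingly parallel.
results_map = list(map(compute_stuff, inputs))

print(results_loop == results_map)  # True
```

Because each call is independent, the same function could be handed to a distributed map (for example, RDD.map in PySpark) without changing its body.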
The code runs `from pyspark.sql import SparkSession`, creates a session with `spark = SparkSession.builder.master('yarn').appName('myAppName').getOrCreate()`, sets `spark.conf.set("mapreduce.fileoutputcommitter.marksuccessfuljobs", "false")`, defines `data = [a, b, c]`, and then processes each item in a `for i in data:` loop. Is there a way to parallelize the for loop? More generally, how can a for loop in Python/PySpark be parallelized so that it can potentially run across multiple nodes on Amazon servers?

A set of threads can run in parallel and return results for different hyperparameters of a random forest. If you want to do something to each row in a DataFrame object, use map. Again, refer to the PySpark API documentation for even more details on all the possible functionality.

There are a number of ways to execute PySpark programs, depending on whether you prefer a command-line or a more visual interface. Again, to start the container, you can run the same docker run command as before. Once you have the Docker container running, you need to connect to it via the shell instead of a Jupyter notebook. Note: Setting up one of these clusters can be difficult and is outside the scope of this guide.

Director of Applied Data Science at Zynga @bgweber.
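The thread-pool pattern described here can be sketched with the standard library's multiprocessing.pool.ThreadPool. The evaluate function is hypothetical: in the real workflow it would fit a random forest with the given n_estimators and return its R-squared, but here it returns a made-up score so the sketch runs without scikit-learn or Spark.

```python
from multiprocessing.pool import ThreadPool

def evaluate(n_estimators):
    """Hypothetical stand-in for fitting a model and scoring it.

    A real version would train a random forest with the given
    n_estimators and return its R-squared; this returns a dummy score.
    """
    dummy_score = 1 - 1 / (n_estimators + 1)
    return n_estimators, dummy_score

hyperparameters = [10, 20, 50, 100]

# Two worker threads, both living in the current (driver) process.
# pool.map() blocks until every value is evaluated and keeps the
# results in the same order as the inputs.
with ThreadPool(processes=2) as pool:
    results = pool.map(evaluate, hyperparameters)

for n_estimators, score in results:
    print(f"n_estimators={n_estimators}, score={score:.3f}")
```

All threads run on the driver, so this only pays off when each call mostly waits on work done elsewhere (such as a Spark job executing on the workers). CPU-bound pure-Python work in threads is still limited by the GIL.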

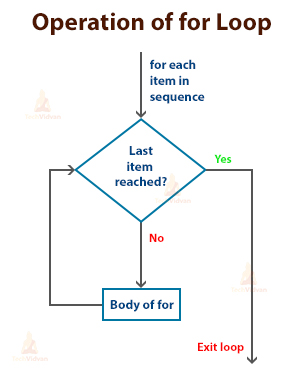

In a for...else construct, the else block runs once the loop finishes without hitting a break statement, and only then does the program move past the loop. Once all of the threads complete, the output displays the hyperparameter value (n_estimators) and the R-squared result for each thread. The same approach can be used to perform parallelized (and distributed) hyperparameter tuning with scikit-learn.

The underlying graph is only activated when the final results are requested. take() pulls that subset of data from the distributed system onto a single machine. Typically, you'll run PySpark programs on a Hadoop cluster, but other cluster deployment options are supported.

size_DF is a list of around 300 elements, which I am fetching from a table.
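To see the order in which a for...else loop runs its parts, this small sketch records each step. The scan function and its inputs are made up for illustration.

```python
def scan(values):
    """Record the order in which a for...else loop runs its parts."""
    events = []
    for v in values:
        events.append(f"saw {v}")
        if v < 0:
            events.append("break")
            break
    else:
        # Reached only when the loop ran to completion without `break`.
        events.append("else")
    events.append("after loop")
    return events

print(scan([1, 2]))      # ['saw 1', 'saw 2', 'else', 'after loop']
print(scan([1, -2, 3]))  # ['saw 1', 'saw -2', 'break', 'after loop']
```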

You can run your program in a Jupyter notebook by starting the Docker container you previously downloaded (if it's not already running). Now you have a container running with PySpark.

Try this Scala sketch, where symbolize, makeAvg, and save are placeholders from the original answer rather than Spark APIs: `marketdata.rdd.map(symbolize).groupByKey().map { case (symbol, days) => (symbol, days.toSeq.sliding(5).map(makeAvg).toSeq) }.foreach { case (symbol, averages) => averages.save() }`. Here, symbolize takes a Row of symbol x day and returns a tuple (symbol, day). The answer originally called reduceByKey with a (symbol, days) pattern, but reduceByKey merges two values at a time. Grouping by key first is what lets each symbol's days be processed as one sequence.

Spark is a distributed parallel computation framework, but there are still some functions that can be parallelized with Python's multiprocessing module. Note: The path to these commands depends on where Spark was installed and will likely only work when using the referenced Docker container. Usually, to force an evaluation, you can call a method that returns a value on the lazy RDD instance that is returned.

I am using Spark to process the CSV file 'bill_item.csv', and I tried the following approaches. The first approach is not efficient, given that in real life we have millions of records, which brings memory and sequential-execution problems. I further optimized this by splitting the data on the basis of "item_id". After splitting, I executed the same algorithm that I used in "Approach 1", and for 200,000 records it still takes 1.03 hours to get the final output (a significant improvement from 4 hours under "Approach 1").

The custom function would then be applied to every row of the dataframe.
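Counting bills per item does not require a loop per item. This plain-Python sketch does it in one grouped pass over hypothetical (bill_id, item_id) rows, since the real file's columns aren't shown. In PySpark, the analogous step would be a groupBy on the item column with a countDistinct aggregation on the bill column.

```python
from collections import defaultdict

def num_of_bills(rows):
    """Count distinct bills per item in one pass over (bill_id, item_id) rows.

    Using a set per item means a bill that lists the same item twice is
    still counted once.
    """
    bills_per_item = defaultdict(set)
    for bill_id, item_id in rows:
        bills_per_item[item_id].add(bill_id)
    return {item_id: len(bills) for item_id, bills in bills_per_item.items()}

# Hypothetical rows: items 1 and 2 appear on bills 'ABC' and 'DEF';
# item 3 appears only on 'DEF'. ('ABC', 1) is deliberately repeated.
rows = [("ABC", 1), ("ABC", 2), ("DEF", 1), ("DEF", 2), ("DEF", 3), ("ABC", 1)]
print(num_of_bills(rows))  # {1: 2, 2: 2, 3: 1}
```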

The best way I found to parallelize such embarrassingly parallel tasks in Databricks is using a Pandas UDF (https://databricks.com/blog/2020/05/20/new-pandas-udfs-and-python-type-hints-in-the-upcoming-release-of-apache-spark-3-0.html?_ga=2.143957493.1972283838.1643225636-354359200.1607978015).
To stop your container, type Ctrl+C in the same window you typed the docker run command in. In the previous example, no computation took place until you requested the results by calling take().

How can we parallelize a loop in Spark so that the processing is not sequential but parallel? Spark has a number of ways to import data. You can even read data directly from a Network File System, which is how the previous examples worked. You can work around the physical memory and CPU restrictions of a single workstation by running on multiple systems at once. I want to do parallel processing in a for loop using PySpark.
You need to use that URL to connect to the Docker container running Jupyter in a web browser. So, you can experiment directly in a Jupyter notebook! I used the Databricks Community Edition to author this notebook and previously wrote about using this environment in my PySpark introduction post.

The new iterable that map() returns will always have the same number of elements as the original iterable, which was not the case with filter(). map() automatically calls the lambda function on all the items, effectively replacing an explicit for loop that applies the function to each item in turn. Such a loop has the same result as the map() example, collecting all items in their upper-case form. Note: The above code uses f-strings, which were introduced in Python 3.6. Developers in the Python ecosystem typically use the term lazy evaluation to explain this behavior.

For example, say we have 100 executor cores (50 executors with 2 cores each, so 50 * 2 = 100) and each job has 50 partitions. Then using this method with a thread pool of 2 reduces the time by approximately half, because two 50-task jobs can run side by side.

At the least, I'd like to use multiple cores simultaneously, like parfor.
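The map() versus for-loop equivalence, and the contrast with filter(), can be checked with a few made-up strings:

```python
names = ["spark", "python", "jupyter"]

# map() calls the lambda on every item; list() forces the lazy iterator.
upper_map = list(map(lambda s: s.upper(), names))

# The explicit for loop that map() replaces.
upper_loop = []
for s in names:
    upper_loop.append(s.upper())

# filter(), unlike map(), may return fewer items than it was given.
short_names = list(filter(lambda s: len(s) < 6, names))

print(upper_map)                         # ['SPARK', 'PYTHON', 'JUPYTER']
print(upper_map == upper_loop)           # True
print(len(upper_map), len(short_names))  # 3 1
```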

You can set up those details when you create the SparkContext. You can start creating RDDs once you have a SparkContext. From this dataset, I created another dataset containing only the numeric attributes, stored in an array.

One of the newer features in Spark that enables parallel processing is Pandas UDFs. I created a Spark DataFrame with the list of files and folders to loop through, and passed it to a Pandas UDF with a specified number of partitions (essentially, the number of cores to parallelize over). Then, you're free to use all the familiar idiomatic Pandas tricks you already know. Remember: Pandas DataFrames are eagerly evaluated, so all the data will need to fit in memory on a single machine. With this approach, the result is similar to the method with thread pools, but the main difference is that the task is distributed across worker nodes rather than performed only on the driver. All of the complicated communication and synchronization between threads, processes, and even different CPUs is handled by Spark.

On the other hand, if we specify a thread pool of 3, we get the same performance. With only 100 executor cores, only 2 of the 50-partition jobs can run at the same time. Even though three jobs are submitted from the driver, only 2 will run, and the executors will pick up the third job only once the first two complete.

The iterrows() function for iterating through each row of a DataFrame belongs to the pandas library, so we first have to convert the PySpark DataFrame into a pandas DataFrame using toPandas(). With joblib, the start method has to be configured by setting the JOBLIB_START_METHOD environment variable to 'forkserver' instead of …

Creating a SparkContext can be more involved when you're using a cluster. In Scala, import the following classes: org.apache.spark.SparkContext and org.apache.spark.SparkConf.
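The executor arithmetic above can be written as a tiny helper. This is a deliberately simplified model, not how Spark's scheduler works internally. It assumes one task per partition, one core per task, and that a job counts as running only when all of its tasks fit on free cores at once.

```python
def concurrent_jobs(total_cores, partitions_per_job, pool_size):
    """Rough estimate of how many driver-submitted jobs run at once.

    Simplifying assumptions: every job has one task per partition, each
    task occupies one executor core, and a job only counts as running
    when all of its tasks fit on free cores at the same time.
    """
    jobs_that_fit = total_cores // partitions_per_job
    # A job larger than the cluster still runs, just in waves of tasks.
    return max(1, min(pool_size, jobs_that_fit))

total_cores = 50 * 2  # 50 executors with 2 cores each

print(concurrent_jobs(total_cores, 50, pool_size=1))  # 1
print(concurrent_jobs(total_cores, 50, pool_size=2))  # 2
print(concurrent_jobs(total_cores, 50, pool_size=3))  # 2
```

Under this model, going from a pool of 1 to a pool of 2 roughly halves the wall-clock time, while a pool of 3 adds nothing because the cluster only has room for two 50-task jobs.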
In my scenario, I exported multiple tables from SQLDB to a folder using a notebook and ran the requests in parallel. Or will it execute the parallel processing on the multiple worker nodes, for example from a SageMaker Jupyter notebook?

Note: Replace 4d5ab7a93902 with the CONTAINER ID used on your machine.

Next, we define a Pandas UDF that takes a partition as input (one of these copies) and returns a Pandas data frame specifying the hyperparameter value that was tested and the result (R-squared). Again, imagine this as Spark doing the multiprocessing work for you, all encapsulated in the RDD data structure. This is recognized as the MapReduce framework, because the division of labor can usually be characterized by sets of the map, shuffle, and reduce operations found in functional programming. Spark runs functions in parallel by default and ships a copy of each variable used in a function to each task. You can read Spark's cluster mode overview for more details.

I tried removing the for loop by using map, but I am not getting any output.

Luke has professionally written software for applications ranging from Python desktop and web applications to embedded C drivers for Solid State Disks.
You can use the spark-submit command installed along with Spark to submit PySpark code to a cluster using the command line. But only 2 items max? Note: Spark temporarily prints information to stdout when running examples like this in the shell, which you'll see how to do soon. I have item and bill data in a CSV file called 'bill_item.csv'. We see that items 1 and 2 have been found under 2 bills, 'ABC' and 'DEF', hence the 'Num_of_bills' for items 1 and 2 is 2. This is a situation that happens with the scikit-learn example with thread pools that I discuss below, and it should be avoided if possible. Pymp allows you to use all cores of your machine. This is parallel execution in the code, not actual parallel execution. On platforms other than Azure, you may need to create the Spark context sc yourself. You simply cannot. So, it might be time to visit the IT department at your office or look into a hosted Spark cluster solution. Now we have used a thread pool from Python's multiprocessing module with processes=2, and we can see from the last 2 digits of the timestamps that the function gets executed in pairs, for 2 columns at a time. All these functions can make use of lambda functions or standard functions defined with def in a similar manner. filter() only gives you the values as you loop over them.
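The 'Num_of_bills' value is a distinct count of bills per item. A minimal local sketch with the standard library follows; the column names Bill_Id and Item_Id and the sample rows are hypothetical stand-ins for bill_item.csv, chosen to match the description that items 1 and 2 appear on bills 'ABC' and 'DEF'.

```python
import csv
import io
from collections import defaultdict

# Hypothetical stand-in for bill_item.csv; column names are assumptions.
# Bill ABC lists item 1 twice, which must still count as one bill.
SAMPLE_CSV = """Bill_Id,Item_Id
ABC,1
ABC,2
ABC,1
DEF,1
DEF,2
GHI,3
"""


def num_of_bills(csv_text):
    """Count how many distinct bills each item appears on."""
    bills_per_item = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        bills_per_item[row["Item_Id"]].add(row["Bill_Id"])  # a set ignores repeats
    return {item: len(bills) for item, bills in bills_per_item.items()}


print(num_of_bills(SAMPLE_CSV))
```

This loop is still sequential. On a cluster, the same result would typically come from a grouped aggregation such as df.groupBy("Item_Id").agg(F.countDistinct("Bill_Id")) (with from pyspark.sql import functions as F), which lets Spark spread the work across executors instead of looping on one machine.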
PySpark foreach is an action in Spark, available on DataFrames and RDDs, that iterates over each and every element in the dataset. But I want to pass the length of each element of size_DF to the function, like this: for row in size_DF: length = row[0]; print("length: ", length); insertDF = newObject.full_item(sc, dataBase, length, end_date). Expressions in this program can only be parallelized if you are operating on parallel structures (RDDs).
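One way to replace the sequential loop is to submit each call from a small driver-side thread pool, as the thread-pool discussion above describes. This is a minimal sketch under stated assumptions: full_item here is a hypothetical stub standing in for newObject.full_item(sc, dataBase, length, end_date), and size_rows stands in for rows collected from size_DF (for example via size_DF.collect()). In real code, each call would launch its own Spark job, and the pool only controls how many are submitted at once.

```python
from multiprocessing.pool import ThreadPool


def full_item(length, end_date):
    """Hypothetical stub for newObject.full_item(sc, dataBase, length, end_date)."""
    return {"length": length, "end_date": end_date}


size_rows = [(3,), (5,), (8,)]  # hypothetical rows collected from size_DF
end_date = "2023-01-31"         # hypothetical end date

# Sequential version, as in the question
sequential = [full_item(row[0], end_date) for row in size_rows]

# Same calls submitted from a pool of 2 driver threads
with ThreadPool(processes=2) as pool:
    parallel = pool.map(lambda row: full_item(row[0], end_date), size_rows)

print(parallel == sequential)
```

Because the threads share the driver's memory, a lambda works here; with a process pool instead, the function passed to map would need to be picklable. pool.map also returns results in input order, so the output lines up with the sequential version.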

Although, again, this custom object can be converted to (and restored from) a dictionary of lists of numbers. To use PySpark's sparkContext.parallelize in an application since PySpark 2.0, you first need to create a SparkSession, which internally creates a SparkContext for you. We then use the LinearRegression class to fit the training data set and create predictions for the test data set. To use a ForEach activity in a pipeline, you can use any array-type variable, or outputs from other activities, as the input for the ForEach activity.
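The point about converting a custom object to and from a dictionary of lists can be sketched with a dataclass. ModelResult and its fields are hypothetical, and the numbers are placeholders; the idea is that a plain dict of lists of numbers is easy to serialize (here with json) and rebuild on the other side.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ModelResult:
    """Hypothetical custom object holding the results of a hyperparameter sweep."""
    alphas: list
    r_squared: list

    def to_dict(self):
        # asdict() turns the dataclass into a plain dict of lists
        return asdict(self)

    @classmethod
    def from_dict(cls, data):
        return cls(**data)


original = ModelResult(alphas=[0.1, 1.0, 10.0], r_squared=[0.5, 0.6, 0.7])  # placeholder values
payload = json.dumps(original.to_dict())  # plain text, easy to ship between processes
restored = ModelResult.from_dict(json.loads(payload))
print(restored == original)
```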

Hope you found this blog helpful. I used the Boston housing data set to build a regression model for predicting house prices using 13 different features. The PySpark shell automatically creates a variable, sc, to connect you to the Spark engine in single-node mode. There can be a lot of things happening behind the scenes that distribute the processing across multiple nodes if you're on a cluster.

You can also use something like the wonderful pymp. In a Python context, think of PySpark as a way to handle parallel processing without the need for the threading or multiprocessing modules. This is increasingly important with big data sets that can quickly grow to several gigabytes in size. The power of those systems can be tapped into directly from Python using PySpark! The best way I found to parallelize such embarrassingly parallel tasks in Databricks is using Pandas UDFs (https://databricks.com/blog/2020/05/20/new-pandas-udfs-and-python-type-hints-in-the-upcoming-release-of-apache-spark-3-0.html?_ga=2.143957493.1972283838.1643225636-354359200.1607978015).
