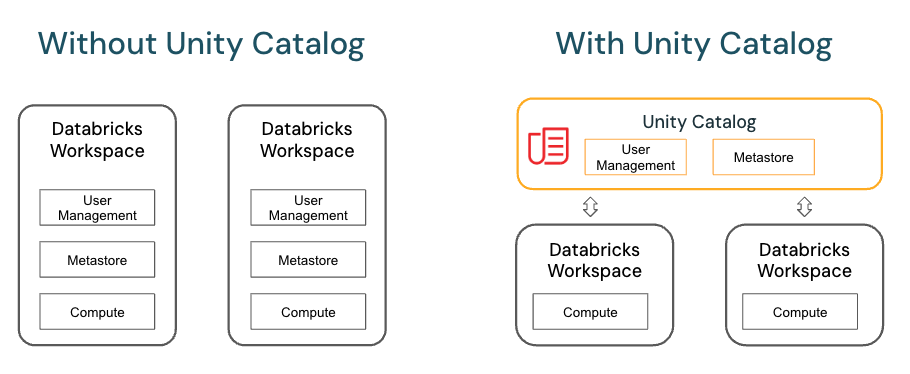

This must be in the same region as the workspaces you want to use to access the data. The Privacera integration for Unity Catalog is now available on Databricks Partner Connect. This reduces the cost of data sharing and avoids the hassle of maintaining multiple copies of the data for different recipients. Key features of Unity Catalog include:

Metastore admins can manage privileges and ownership for all securable objects within a metastore, such as who can create catalogs or query a table. Connect with validated partner solutions in just a few clicks.

The role must therefore exist before you add the self-assumption statement.

Unity Catalog supports the SQL keywords SHOW, GRANT, and REVOKE for managing privileges on catalogs, schemas, tables, views, and functions. Unity Catalog also offers automated and real-time data lineage, down to the column level.
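As a minimal sketch of how these statements look, the helper below builds GRANT, REVOKE, and SHOW GRANTS strings for a three-level object name. The function names and the example catalog, schema, table, and group (`main`, `default`, `department`, `data-consumers`) are hypothetical, chosen for illustration only; the resulting strings would be run in a notebook or SQL editor attached to a Unity Catalog-enabled cluster or SQL warehouse.

```python
def quote_ident(name: str) -> str:
    """Backtick-quote one identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def qualified(*parts: str) -> str:
    """Join identifier parts (catalog, schema, table) into a dotted name."""
    return ".".join(quote_ident(p) for p in parts)


def grant(privilege: str, securable_type: str, name_parts, principal: str) -> str:
    """Build a GRANT statement for a user, group, or service principal."""
    return (f"GRANT {privilege} ON {securable_type} "
            f"{qualified(*name_parts)} TO {quote_ident(principal)}")


def revoke(privilege: str, securable_type: str, name_parts, principal: str) -> str:
    """Build the matching REVOKE statement."""
    return (f"REVOKE {privilege} ON {securable_type} "
            f"{qualified(*name_parts)} FROM {quote_ident(principal)}")


def show_grants(securable_type: str, name_parts) -> str:
    """Build a SHOW GRANTS statement listing privileges on one object."""
    return f"SHOW GRANTS ON {securable_type} {qualified(*name_parts)}"


if __name__ == "__main__":
    table = ("main", "default", "department")  # hypothetical names
    print(grant("SELECT", "TABLE", table, "data-consumers"))
    print(show_grants("TABLE", table))
    print(revoke("SELECT", "TABLE", table, "data-consumers"))
```

Quoting every identifier keeps names that contain hyphens, such as the example group, valid without special-casing them.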

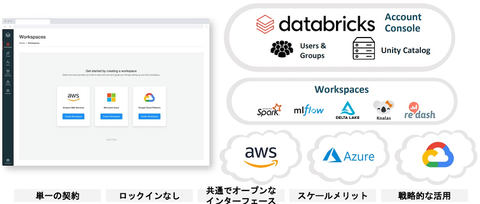

A metastore is the top-level container of objects in Unity Catalog.

To create a cluster that can access Unity Catalog: Log in to your workspace as a workspace admin or user with permission to create clusters. Skip the permissions policy configuration.

A table resides in the third layer of Unity Catalog's three-level namespace. Only the initial Azure Databricks account admin must have this role. Access Connector ID: Enter the Azure Databricks access connector's resource ID in the format: When prompted, select workspaces to link to the metastore. Refer to those users, service principals, and groups when you create access-control policies in Unity Catalog. Earlier versions of Databricks Runtime supported preview versions of Unity Catalog.

A view can be created from tables and other views in multiple schemas and catalogs.

In the Custom Trust Policy field, paste the following policy JSON, replacing

Unity Catalog is secure by default. Modify the trust relationship policy to make it self-assuming.

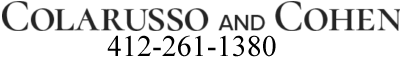

Overwrite mode for DataFrame write operations into Unity Catalog is supported only for Delta tables, not for other file formats. Unity Catalog GA release note (March 21, 2023; August 25, 2022): Unity Catalog is now generally available on Databricks. It is recommended that you use the same region for your metastore and storage container. Each workspace has the same view of the data that you manage in Unity Catalog. You can manage user access to Azure Databricks by setting up provisioning from Azure Active Directory.

On the table page in Data Explorer, go to the Permissions tab and click Grant. For detailed step-by-step instructions, see the sections that follow this one.

To use Unity Catalog, you must create a metastore.

Unity Catalog empowers our data teams to closely collaborate while ensuring proper management of data governance and audit requirements. To query a table, users must have the SELECT permission on the table, the USE SCHEMA permission on its parent schema, and the USE CATALOG permission on its parent catalog. Related articles: Unity Catalog key concepts, Data objects in the Databricks Lakehouse, Manage access to data and objects in Unity Catalog, and Manage external locations and storage credentials.
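The three-privilege rule for querying a table can be modeled with a small check. This is a simplified sketch, not how Unity Catalog evaluates access internally: it assumes grants are stored as a plain dictionary keyed by object name, and it deliberately ignores ownership and privilege inheritance. The function names and the example objects (`main`, `default`, `department`) are hypothetical.

```python
from typing import Dict, Set


def required_for_select(catalog: str, schema: str, table: str) -> Dict[str, str]:
    """Map each object in the hierarchy to the privilege a query needs on it."""
    return {
        f"{catalog}.{schema}.{table}": "SELECT",
        f"{catalog}.{schema}": "USE SCHEMA",
        catalog: "USE CATALOG",
    }


def can_query(grants: Dict[str, Set[str]], catalog: str, schema: str, table: str) -> bool:
    """Return True only if all three required privileges are present."""
    needed = required_for_select(catalog, schema, table)
    return all(priv in grants.get(obj, set()) for obj, priv in needed.items())


if __name__ == "__main__":
    grants = {
        "main": {"USE CATALOG"},
        "main.default": {"USE SCHEMA"},
        "main.default.department": {"SELECT"},
    }
    print(can_query(grants, "main", "default", "department"))
    # Removing any one of the three grants makes the query fail the check.
    del grants["main.default"]
    print(can_query(grants, "main", "default", "department"))
```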

A Unity Catalog metastore can be shared across multiple Azure Databricks workspaces.

If you have a large number of users or groups in your account, or if you prefer to manage identities outside of Databricks, you can sync users and groups from your identity provider (IdP). To use Unity Catalog, you must create a metastore. Note that the hive_metastore catalog is not managed by Unity Catalog and does not benefit from the same feature set as catalogs defined in Unity Catalog.

These workspace-local groups cannot be used in Unity Catalog to define access policies. To learn more, see Capture and view data lineage with Unity Catalog. See What is cluster access mode? You can link each of these regional metastores to any number of workspaces in that region. If your cluster is running on a Databricks Runtime version below 11.3 LTS, there may be additional limitations, not listed here. To learn how to link the metastore to additional workspaces, see Enable a workspace for Unity Catalog. Streaming currently has the following limitations: It is not supported in clusters using shared access mode.

For more information about the Unity Catalog privileges and permissions model, see Manage privileges in Unity Catalog. The metastore will use the S3 bucket and IAM role that you created in the previous step.

"It is enabling us to manage data in a highly secure, sophisticated and convenient way." For the list of currently supported regions, see Azure Databricks regions. Edit the trust relationship policy, adding the following ARN to the Allow statement: "arn:aws:iam::414351767826:role/unity-catalog-prod-UCMasterRole-14S5ZJVKOTYTL", "arn:aws:iam::

Unity Catalog enables you to define access to tables declaratively using SQL or the Databricks Explorer UI. Leveraging this centralized metadata layer and user management capabilities, data administrators can define access permissions on objects using a single interface across workspaces, all based on an industry-standard ANSI SQL dialect. Managed tables are the default way to create tables in Unity Catalog.

- Ed Holsinger, Distinguished Data Engineer, Press Ganey. Working with socket sources is not supported. Azure Databricks account admins can create a metastore for each region in which they operate and assign them to Azure Databricks workspaces in the same region. If encryption is enabled, provide the name of the KMS key that encrypts the S3 bucket contents.

Before you can start creating tables and assigning permissions, you need to create a compute resource to run your table-creation and permission-assignment workloads. See Information schema. When you drop an external table, Unity Catalog does not delete the underlying data. Groups previously created in a workspace cannot be used in Unity Catalog GRANT statements. You can access data in other metastores using Delta Sharing. You can use Unity Catalog to capture runtime data lineage across queries in any language executed on an Azure Databricks cluster or SQL warehouse.

Spark-submit jobs are supported on single user clusters but not shared clusters.

Groups that were previously created in a workspace (that is, workspace-level groups) cannot be used in Unity Catalog GRANT statements. If you expect to exceed these resource limits, contact your Azure Databricks account representative.

Add a user or group to a workspace, where they can perform data science, data engineering, and data analysis tasks using the data managed by Unity Catalog: In the sidebar, click Workspaces and select a workspace. Each workspace can have only one Unity Catalog metastore assigned to it. This storage account will contain your Unity Catalog managed table files.

Support for shared clusters requires Databricks Runtime 12.2 LTS and above, with the following limitations: Support for single user clusters is available on Databricks Runtime 11.3 LTS and above, with the following limitations: See also Using Unity Catalog with Structured Streaming. If you are adding identities to a new Azure Databricks account for the first time, you must have the Contributor role in the Azure Active Directory root management group, which is named Tenant root group by default. The user who creates a metastore is its owner, also called the metastore admin. External tables can use the following file formats: To manage access to the underlying cloud storage for an external table, Unity Catalog introduces the following object types: See Manage external locations and storage credentials. To use groups in GRANT statements, create your groups in the account console and update any automation for principal or group management (such as SCIM, Okta and AAD connectors, and Terraform) to reference account endpoints instead of workspace endpoints. You can assign and revoke permissions using Data Explorer, SQL commands, or REST APIs. You can only specify external locations managed by Unity Catalog for these options: Asynchronous checkpointing is not supported in Databricks Runtime 11.3 LTS and below. It is part of the Databricks CLI.

If you are unsure which account type you have, contact your Databricks representative. Streaming currently has the following limitations: It is not supported in clusters using shared access mode.

Unity Catalog natively supports Delta Sharing, an open standard for secure data sharing.

Clusters running on earlier versions of Databricks Runtime do not provide support for all Unity Catalog GA features and functionality. Unity Catalog supports the following table formats: Unity Catalog has the following limitations.

Unity Catalog provides centralized access control, auditing, lineage, and data discovery capabilities across Databricks workspaces. You can also grant row- or column-level privileges using dynamic views. For more bucket naming guidance, see the AWS bucket naming rules.

You can optionally specify managed table storage locations at the catalog or schema levels, overriding the root storage location. On Databricks Runtime version 11.2 and below, streaming queries that last more than 30 days on all-purpose or jobs clusters will throw an exception. See Manage users, service principals, and groups. This helps you understand how your data is being used and helps ensure data security and compliance with regulatory requirements.

Use the Azure Databricks account console UI to: Unity Catalog requires clusters that run Databricks Runtime 11.1 or above.

Overwrite mode for DataFrame write operations into Unity Catalog is supported only for Delta tables, not for other file formats. For complete instructions, see Sync users and groups from Azure Active Directory. Quota values below are expressed relative to the parent object in Unity Catalog. Add users, groups, and service principals to your Azure Databricks account. Custom partition schemes created using commands like, Securable objects in Unity Catalog are hierarchical and privileges are inherited downward. You must use account-level groups. In AWS, you must have the ability to create S3 buckets, IAM roles, IAM policies, and cross-account trust relationships. In this example, we use a group called, Select the privileges you want to grant. Set Databricks runtime version to Runtime: 11.3 LTS (Scala 2.12, Spark 3.3.0) or higher. You will use this compute resource when you run queries and commands, including grant statements on data objects that are secured in Unity Catalog. This S3 bucket will be the root storage location for managed tables in Unity Catalog. See (Recommended) Transfer ownership of your metastore to a group. For details and limitations, see Limitations. It focuses primarily on the features and updates added to Unity Catalog since the Public Preview. Scala, R, and workloads using the Machine Learning Runtime are supported only on clusters using the single user access mode. It describes how to enable your Azure Databricks account to use Unity Catalog and how to create your first tables in Unity Catalog. Instead, use the special thread pools in.

A view is a read-only object created from one or more tables and views in a metastore.

This article provides step-by-step instructions for setting up Unity Catalog for your organization. Unity Catalog provides a unified governance solution for all data and AI assets in your lakehouse on any cloud. If you do not have this role, grant it to yourself or ask an Azure Active Directory Global Administrator to grant it to you.

To configure identities in the account, follow the instructions in Manage users, service principals, and groups. Unity Catalog provides centralized access control, auditing, lineage, and data discovery capabilities across Azure Databricks workspaces. information_schema is fully supported for Unity Catalog data assets.

Unity Catalog is supported by default on all SQL warehouse compute versions. Bucketing is not supported for Unity Catalog tables. Use a dedicated S3 bucket for each metastore and locate it in the same region as the workspaces you want to access the data from.

Unity Catalog requires one of the following access modes when you create a new cluster: A secure cluster that can be shared by multiple users. Create an IAM role that will allow access to the S3 bucket. You must modify the trust policy after you create the role because your role must be self-assuming, that is, it must be configured to trust itself. It is designed to follow a "define once, secure everywhere" approach, meaning that access rules will be honored from all Databricks workspaces, clusters, and SQL warehouses in your account, as long as the workspaces share the same metastore. It describes how to enable your Databricks account to use Unity Catalog and how to create your first tables in Unity Catalog. You can link each of these regional metastores to any number of workspaces in that region. For detailed step-by-step instructions, see the sections that follow this one.
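To make the self-assuming requirement concrete, here is a rough sketch that assembles a trust policy whose Allow statement trusts both the Unity Catalog master role named in this article and the role itself. The function name, the account ID `123456789012`, and the role name `my-uc-role` are hypothetical placeholders. The second principal is an assumption about what the self-assumption entry looks like, built from the standard IAM role ARN format. Any condition keys that the official policy may require are not reproduced here, so copy the exact policy from the Databricks documentation before applying it.

```python
import json

# ARN quoted in this article for the Unity Catalog master role.
UC_MASTER_ROLE_ARN = (
    "arn:aws:iam::414351767826:role/unity-catalog-prod-UCMasterRole-14S5ZJVKOTYTL"
)


def self_assuming_trust_policy(aws_account_id: str, role_name: str) -> dict:
    """Build a trust policy that also lists the role's own ARN as a principal.

    The role must already exist before this policy is attached, because IAM
    validates that every principal ARN in the policy refers to a real role.
    """
    self_arn = f"arn:aws:iam::{aws_account_id}:role/{role_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": [UC_MASTER_ROLE_ARN, self_arn]},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical account ID and role name, for illustration only.
    policy = self_assuming_trust_policy("123456789012", "my-uc-role")
    print(json.dumps(policy, indent=2))
```

Generating the policy from the role name avoids hand-editing the ARN twice, which is where a mismatch between the role and its self-reference usually creeps in.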

Assign and remove metastores for workspaces. The initial account-level admin can add users or groups to the account and can designate other account-level admins by granting the Admin role to users. To create a table, users must have CREATE and USE SCHEMA permissions on the schema, and they must have the USE CATALOG permission on its parent catalog. You can create dynamic views to enable row- and column-level permissions. As of August 25, 2022, Unity Catalog had the following limitations. Each linked workspace has the same view of the data in the metastore, and data access control can be managed across workspaces. This default storage location can be overridden at the catalog and schema levels.
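The override order for managed table storage can be sketched as a simple lookup: a schema-level location wins over a catalog-level location, which wins over the metastore root. This is an illustrative model only; the function name and the example `s3://` paths are hypothetical, and the sketch says nothing about how Unity Catalog lays out files beneath the chosen location.

```python
from typing import Optional


def resolve_managed_location(
    metastore_root: str,
    catalog_location: Optional[str] = None,
    schema_location: Optional[str] = None,
) -> str:
    """Return the most specific managed storage location that is set."""
    for location in (schema_location, catalog_location):
        if location:
            return location
    return metastore_root


if __name__ == "__main__":
    root = "s3://uc-root-bucket/metastore"  # hypothetical paths
    print(resolve_managed_location(root))
    print(resolve_managed_location(root, catalog_location="s3://finance-bucket/catalog"))
    print(resolve_managed_location(
        root,
        catalog_location="s3://finance-bucket/catalog",
        schema_location="s3://finance-bucket/payroll",
    ))
```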

You can create no more than one metastore per region. As the original table creator, you're the table owner, and you can grant other users permission to read or write to the table. If a workspace-local group is referenced in a command, that command will return an error that the group was not found. A catalog is the first layer of Unity Catalog's three-level namespace.

To use groups in GRANT statements, create your groups at the account level and update any automation for principal or group management (such as SCIM, Okta and AAD connectors, and Terraform) to reference account endpoints instead of workspace endpoints. To enable your Databricks account to use Unity Catalog, you do the following: Configure an S3 bucket and IAM role that Unity Catalog can use to store and access managed table data in your AWS account. The assignment of users, service principals, and groups to workspaces is called identity federation. SQL warehouses support Unity Catalog by default, and there is no special configuration required. For specific configuration options, see Configure SQL warehouses. The Unity Catalog CLI requires Databricks CLI 0.17.0 or above, configured with authentication.

Cluster users are fully isolated so that they cannot see each other's data and credentials.

In this step, you create users and groups in the account console and then choose the workspaces these identities can access. To learn how to link the metastore to additional workspaces, see Enable a workspace for Unity Catalog. Limits respect the same hierarchical organization throughout Unity Catalog. Catalogs hold the schemas (databases) that in turn hold the tables that your users work with. A Unity Catalog metastore can be shared across multiple Databricks workspaces. This metastore functions as the top-level container for all of your data in Unity Catalog. The metastore admin can create top-level objects in the metastore such as catalogs and can manage access to tables and other objects. Workspace admins can add users to an Azure Databricks workspace, assign them the workspace admin role, and manage access to objects and functionality in the workspace, such as the ability to create clusters and change job ownership.
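The catalog, schema, and table hierarchy described above gives every table a three-part name. The sketch below splits such a name into its parts; it is a simplified illustration with a hypothetical helper name and example table, and it assumes plain identifiers without backtick quoting or embedded dots.

```python
from typing import NamedTuple


class TableName(NamedTuple):
    """The three levels of a fully qualified table name."""
    catalog: str
    schema: str
    table: str


def parse_table_name(full_name: str) -> TableName:
    """Split 'catalog.schema.table' into its parts, rejecting other shapes."""
    parts = full_name.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(
            f"expected catalog.schema.table, got {full_name!r}"
        )
    return TableName(*parts)


if __name__ == "__main__":
    name = parse_table_name("main.default.department")  # hypothetical table
    print(name.catalog, name.schema, name.table)
```

Rejecting two-part names up front is a deliberate choice in this sketch: a name like `default.department` is ambiguous without knowing the session's current catalog.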

Unity Catalog also centralizes identity management, which includes service principals, users, and groups, providing a consistent view across multiple workspaces.

Create a notebook and attach it to the cluster you created in Create a cluster or SQL warehouse. SQL warehouses are used for executing queries in Databricks SQL.

Create the IAM role with a Custom Trust Policy. Asynchronous checkpointing is not yet supported. Make a note of the region where you created the storage account. Databricks Model Serving accelerates deployments of ML models by providing native integrations with various services. See Use Azure managed identities in Unity Catalog to access storage. Lineage is captured down to the column level and includes notebooks, workflows, and dashboards related to the query.

You must be an Azure Databricks account admin. Unity Catalog offers a centralized metadata layer to catalog data assets across your lakehouse.
